Enterprise support teams are racing to adopt GenAI. Most are deploying it through chatbots, answer generation, or agent assistance. Few are stopping to ask the hard question:

Is GenAI delivering measurable business impact, or just generating content?

This is where most GenAI initiatives break down. Without governance, visibility, and accountability, AI quickly becomes operational noise. SearchUnifyGPT™ addresses this gap by transforming GenAI from an experimental capability into a measurable support system.

The Core Problem: AI Adoption Without Proof

Most search and GPT implementations in support fall short for three reasons:

- Generated answers that lack confidence or consistency

- No linkage between AI interactions and case deflection

- Limited visibility into whether agents trust or use AI

The result is AI that looks impressive in demos but collapses under enterprise scrutiny.

Support leaders do not need more generated answers. They need measurable outcomes, governed execution, and predictable impact.

SearchUnifyGPT™ was designed to operate differently. It embeds GenAI directly into the support ecosystem with context, control, and accountability built in.

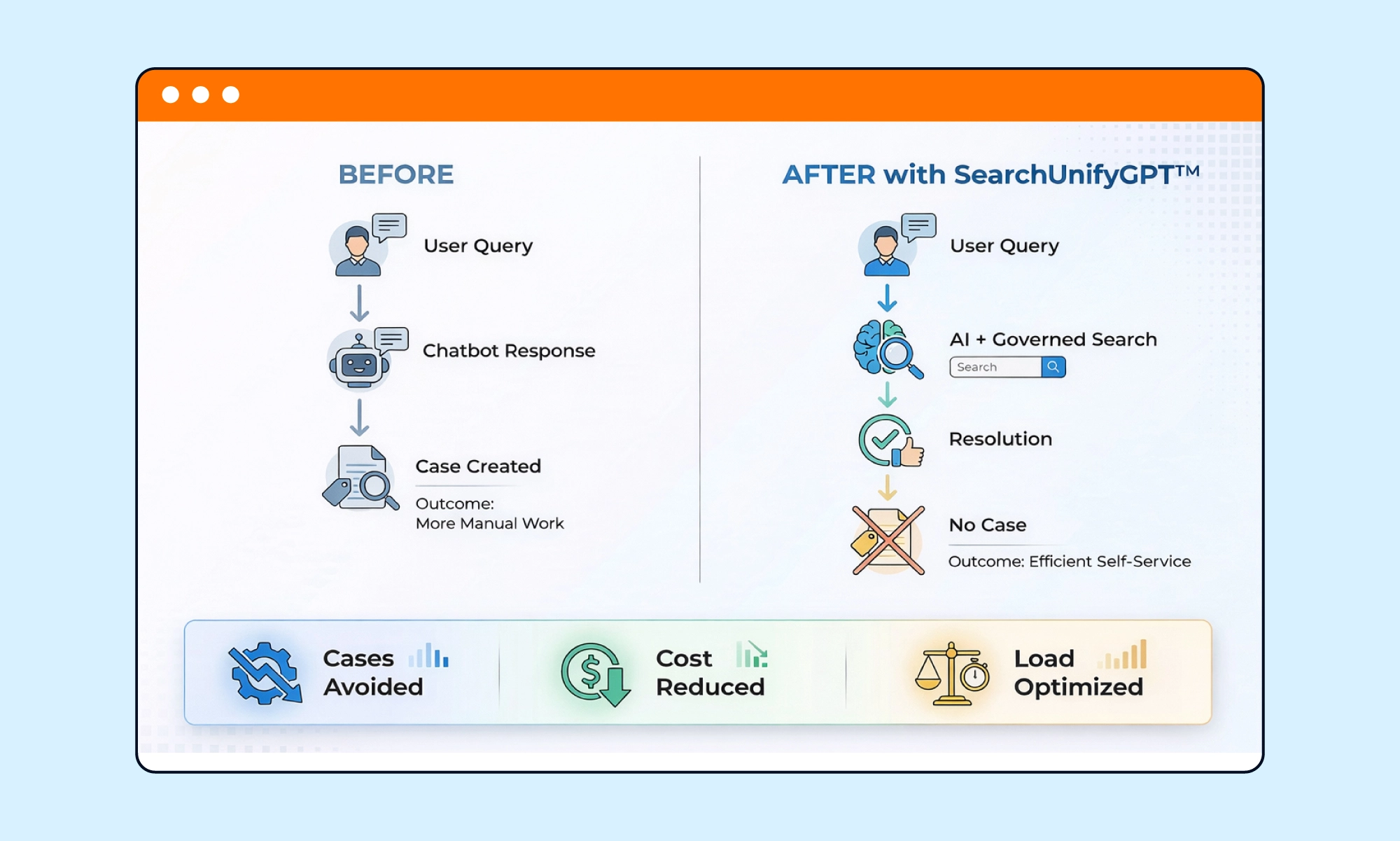

Measuring What Matters: Case Deflection with SearchUnifyGPT™

The success of GenAI in support is not measured by conversations. It is measured by cases that never get created.

SearchUnifyGPT™ works in conjunction with SearchUnify analytics to identify:

- Self-service journeys where AI-generated responses resolved intent

- Search sessions that ended without escalation

- Scenarios where GenAI assisted resolution but escalation still occurred

This enables support leaders to quantify case deflection with confidence, rather than relying on assumptions or proxy metrics.

For the first time, support leaders can report how AI contributes to cost reduction and workload optimization, moving AI from perceived value to provable ROI.

Governance by Design: Controlled GenAI, Not Open-Ended Risk

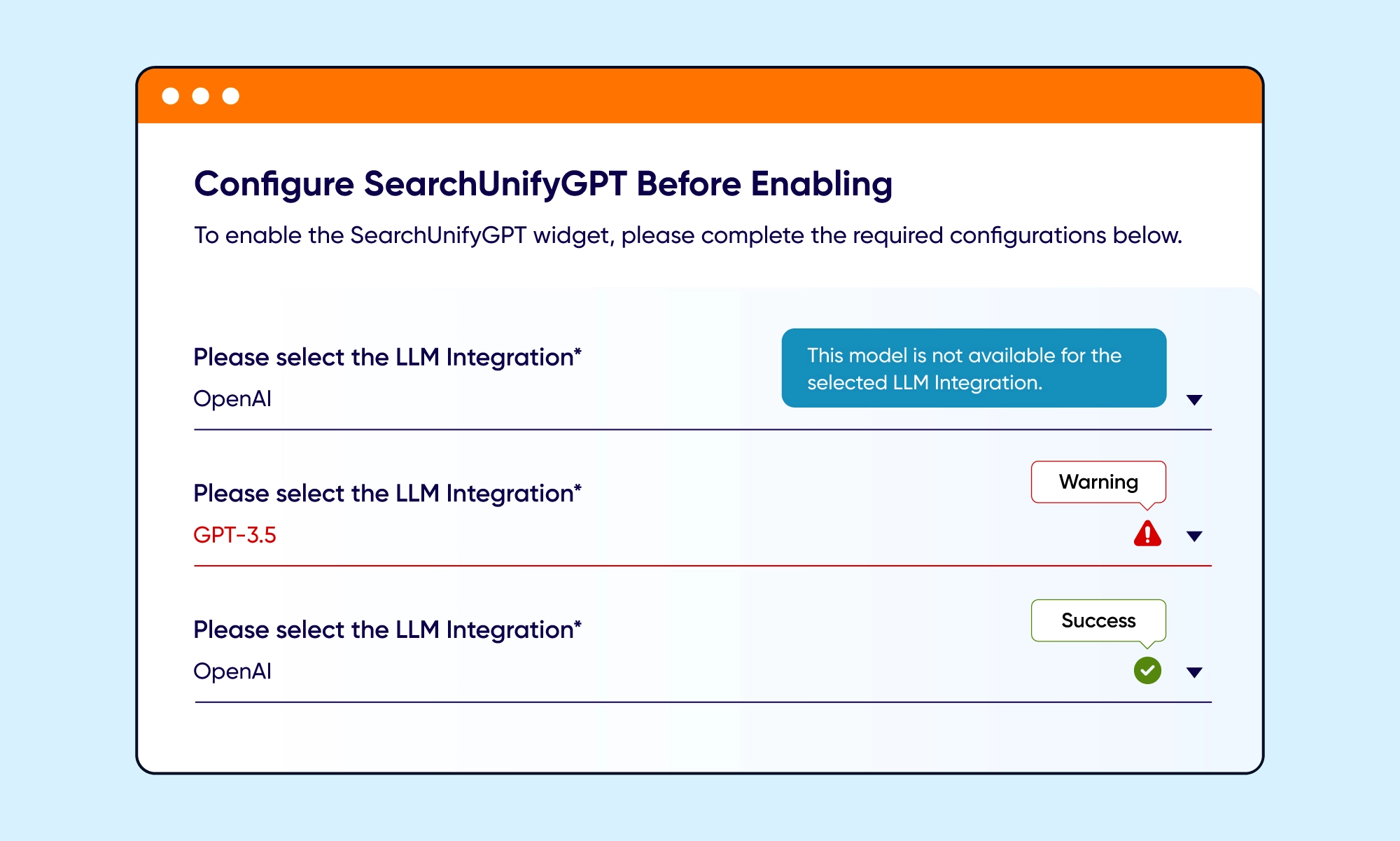

Enterprise support environments demand control. SearchUnify delivers configurable GenAI execution aligned with business constraints.

Organizations can:

- Select approved LLM providers, such as OpenAI, Gemini, or Claude, and a specific model within each, such as GPT-4.1 or GPT-5.1 for OpenAI

- Control when and where generative responses are invoked

- Maintain compliance, brand tone, and security posture

This eliminates the black-box problem that plagues most AI deployments. GenAI becomes a governed system component, not an unpredictable experiment.

Governance is not a blocker to innovation. It is what makes innovation deployable at scale.

From Enablement to Accountability: Customer-Level Visibility

AI value does not materialize unless customers and agents actually use it.

SearchUnify provides real-time analytics on AI adoption, behavior, and impact, including:

- Usage trends across AI features

- Engagement depth at the agent level

- Correlation between AI usage and resolution efficiency

This allows leaders to move beyond rollout metrics and manage AI as an operational capability. Adoption gaps become visible. Coaching becomes targeted. ROI becomes sustainable.

The Strategic Shift: AI as an Operating Model

What SearchUnify enables is not an incremental improvement. It is a structural shift in how AI is operationalized in support.

- AI usage is measurable

- AI outcomes are attributable

- AI behavior is governed

- AI adoption is visible

This is the difference between AI theater and enterprise-grade execution.

The Bottom Line

GenAI will not fail because the technology is weak. It will fail because enterprises deploy it without governance, accountability, and outcome measurement.

SearchUnify is setting a new standard where AI in support is:

- Measured by business impact

- Governed by design

- Optimized through data

AI that cannot prove its value does not belong in enterprise support.

AI that can is a competitive advantage.

Last Updated: January 19, 2026